Applying what we’ve learned so far (“Midterm” writing assignment)

Using the readings and other course materials we’ve looked at so far, apply an intersectional lens to the idea of technological progress. In order to do this, you might draw on readings we’ve done and discussions we’ve had so far, and you might want to show how those readings apply to things that are still going on today, or talk about how technologies have changed, and how that has–and hasn’t–shifted power structures.

Your essay doesn’t have to be too long–write no more than 700 words (this equates to roughly 4-5 pages of double-spaced text in a standard word processing program). Essays will be passed in by posting them as a comment on this blog post, and will be due on October 10th by 11pm (note the extended deadline; the original due date was October 5).

A few more details: As you are writing your essay, resist the urge to simply say that nothing much has changed. The most important part of history is looking at change over time. Sometimes this change will not always be progressive. Sometimes things change for the worse. Or they get better and then change back. But they almost always change, and in these changes we can find some sort of lesson, if we look carefully and are clever about synthesizing new knowledge from the details we’ve taken in. Try to tease that out—the new lesson that you find in analyzing the course material and the change over time (and yes, the similarities over time too) that you see played out.

Remember that every essay should try to argue something new and support it with specific evidence from the texts and the course. So start out with a statement that isn’t obvious—maybe even one you’re not too sure about—and see if the evidence holds as you write, altering your argument as needed as you go along. Try to tell your readers something we wouldn’t have known or understood before reading your essay, even if we’d done all the same readings. Try to surprise us—maybe even yourself—with your new insight.

If you’re stuck figuring out what an argument or thesis statement should look like, go back over the readings that we’ve had in the course and try to write down what the argument is in each of those readings. That will help give you an impression of what a historical argument can look like. Sometimes the argument is explicitly stated, but sometimes it’s not and you have to try to infer what it is.

Note: Please LEAVE AN EXTRA LINE of space (hit ‘return’ or ‘enter’ twice) after every paragraph, because this system strips out indents and your paragraphs will run together in one solid block of text if you don’t leave an extra line. Also, your paper will not be visible as a comment immediately after you post it because I have to read and approve them. Because this website, unlike our class discussion board, can be viewed by anyone on the web, I will only make your post visible if you give me permission to do so–please let me know in your post if you do or don’t want me to make your post visible online. And, you can choose a pseudonym for your screen name–you do not have to use your real name.

What is progress and who gets to decide if technology has progressed? These questions seem like important ones to consider given that progress for one party might feel like regression to another. When it comes to technology, it seems a small group of technology creators and business people largely get to decide what progress is. People who disagree — either because they see problems created by technology or might be marginalized by the technology themselves — fight against that. This dynamic is important, because it allows different lenses to be involved in the discussion of defining technological progress. In keeping with intersectional theory, something can progress in one sense (pure, technical lines of code sense) and not progress or even regress in another sense (culturally or socially). We cannot determine if something is progress if we only look at one road. Furthermore, progress simply can’t be designed and coded on the back of regression and oppression.

Applying an intersectional lens to technological progress makes it immediately clear that progress is not a clean, linear path. When looking at the places where roads intersect, things become complicated, and progression and regression are both present in different ways. Using the texts from the course that touch on medical technology, we can see an example of this. In a technological sense, progress in medical technology has been made in using machinery to retrieve and report information and to perform tasks. In a social sense, progress has not been made in how the institution of medical science interacts with the humans who need medical care. In fact, technologies may take advantage of and build upon systems of oppression. As Dr. Deirdre Cooper Owens says, the oppression that enabled the abuse of enslaved Black women by slave owners set the stage for the racism that is still baked into the healthcare system to this day.

This is just one example of the symbiotic relationship between technology and the world that technologies exist within. It also shows that technology designed with the intent of progress in one sense can actually lead to regression in another sense. Another example is Joy Buolamwini’s work around facial recognition and racial justice: the technology of facial recognition cannot truly be considered progress when it furthers race-based discrimination. Yet another example can be seen in our course reading of “Decoding Femininity in Computer Science in India”, where we see that cultural expectations of women in India factor into who gets to create technology, which in turn influences the technology that gets built. This same sentiment was shared by Ben Barres in our first week of readings.

Through our readings so far this semester, my biggest takeaway has been how, when it comes to technology, nothing can stand on its own – no piece of technology, no policy, no idea. It will be working with or working against other systems around it. As Dr. Ruha Benjamin said in her interview with Janine Jackson, technology is “part of the pattern” when it comes to systemic oppression. Injustice has to be understood in more than a simple sense by those creating technology for that technology to actually help dismantle that system. Reading and listening to the work of scholars like Dr. Benjamin, Dr. Cooper Owens, and Dr. Gilliard is so refreshing and exhilarating because it has helped me, as a UX designer in the tech industry, form a view of what I think tech and design need to really do to live up to the promises the industries make (and largely aren’t delivering on) today. Without stepping back and taking time to understand the world, including systems of oppression, we’re doomed as technologists to keep creating things built on oppression while believing we can build a better world. It’s a maddening, twisted ball of yarn that feels like a sociological problem but also like a design, product, and engineering problem.

The “new” insight that I would offer at the midterm of our semester is that the mainstream, professional definition of technological progress needs to be overhauled to learn from scholars like the ones we’re reading. I know this might not seem like a new idea, but it feels like a big, important topic to me because the gulf between professional technologists and scholars studying technology can feel like a big one, and I think overhauling how we talk about and evaluate technological progress outside of the classroom is critical. I have read recently about some of this work like the #TechWontBuildIt and #DesignJustice movements in Sasha Costanza-Chock’s Design Justice. I want to be part of movements like these as a professional tech worker. As our HIST 580 course readings have shown me, the idea of social progress and justice simply can’t be relegated to the side and be a promise of something that will inevitably flow from the technology. It has to be the center. It has to guide the technology from the start — that feels like the only way to shift power structures rather than building on them.

Through the civil rights and women’s rights movements, Black women have gained rights they did not have before. While discrimination based on race and sex is no longer openly acceptable, Black women in particular still face social issues that show no sign of ending. One of the ways Black women are targeted is through technology.

The bodies of enslaved Black women were used in experiments for hundreds of years to find treatments for unknown conditions and diseases. Peter Mettauer, a surgeon and gynecologist, conducted an experiment on both an enslaved Black woman and a white woman in order to find a cure for vesicovaginal fistula. He carried out the same procedures on both women, and the white woman was cured while the Black woman was not. His conclusion was that the Black woman was not cured because she would not stop having sex. The Black woman was more than likely forced to be a part of this experiment, and she had no control over her own body because she was enslaved. She could not control who she had sexual relationships with. Mettauer’s conclusion displays how technology was used to place the blame on Black women’s behavior and further attack their image.

Black women today are not forced to be a part of experiments, but they still deal with parallel situations. For example, Black women have the highest infant and maternal mortality rates and therefore the highest abortion rate. Society still places the blame for the high abortion rate among Black women on their “lack of knowledge,” such as not using birth control or not controlling their libido. Yet what society should focus on is why Black women have the highest infant and maternal mortality rates and what exactly is happening behind the scenes in hospitals for this trend to be present.

Black people and women typically had a more difficult time finding jobs than white men. It is now illegal for employers to discriminate based on race or sex. This, however, leaves Black women stranded, as seen in the DeGraffenreid v. General Motors case. DeGraffenreid believed she was discriminated against for being a Black woman, but because General Motors hired Black people and women, her case was dismissed. Black men were typically hired for industrial and maintenance jobs, while white women were hired for secretarial and office jobs. This shows how, although Black women have gained rights through the civil rights and women’s rights movements, they are a specific group that is still discriminated against for being at the intersection of race and sex.

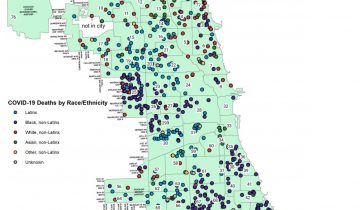

Very recently, the Black Lives Matter movement has gained massive momentum, yet it is clear that Black women’s lives are overlooked. In one of Dr. Crenshaw’s presentations on intersectionality, she named Black people killed through police brutality, and unsurprisingly the names of the Black women victims were the least familiar to the audience. These Black women received little to no news coverage compared to Black men. This shows how the Black Lives Matter movement is counterproductive, as it pays little to no mind to the lives of Black women. George Floyd’s death sparked riots and protests worldwide within days, in contrast to Breonna Taylor’s death, which only received attention months afterward and gained little traction toward justice. Floyd’s last words, “I can’t breathe,” were popularized by multiple media sources ranging from the news to Facebook and other social media platforms. For weeks, one could not open Facebook without seeing a George Floyd related post. Facebook’s algorithm picked up on this event but only popularized Breonna Taylor’s case after the protests began.

A subtle way in which technology still targets Black women is through facial ID applications. Systems from three companies (IBM, Microsoft, and Face++) all perform worst on women with darker complexions compared to white people and Black men. While this may not be a major issue right now, facial ID will become a core method of security in the near future. This leaves Black women more vulnerable to identity theft than their white and male counterparts. If issues like these are not resolved, Black women may continue to receive unfair treatment.

Technological progress has been a core element of humanity, especially since the onset of the industrial revolution. Advancements taken by themselves are often renowned for their technical achievements, while flaws show themselves later in the application of such science. Take medical science – advancements made on enslaved women’s bodies were printed in journals and celebrated for moving the medical community forward, while at the same time erasing the patients and women who were used to make such progress. This erasure has allowed for the racism and sexism related to those black women to live on within medical science. Technology provided a framework that encapsulated racial and gender issues, granting them a long and dangerous life. This is why an intersectional view on technology is critical if we are to move forward in our digital world, because as the complexity of technology increases, so does the complexity of solving the social issues it carries.

In her book Medical Bondage, Dr. Cooper Owens describes how white people, and especially doctors, viewed black women as physically stronger, including the apparent inability to feel pain. Critically, “the black female body was further hypersexualized, masculinized, and endowed with brute strength because medical science validated these ideologies”. When reading about medical science from the 19th century, it is easy to see these practices as past horrors with little in common with the present. However, these ideas about black women have led to black babies dying at twice the rate of white babies in the 21st century. A personal example of how modern-day racism against black women shows itself in medical science was documented by Tressie McMillan Cottom. Nurses and doctors refused to accept her exclamations of pain as real and ignored her own descriptions of her symptoms, demonstrating similar biases to those of the antebellum period.

Another current-day example can be found in the very recent case of hysterectomies being performed on women of color detained in ICE concentration camps. We see doctors build their science on enslaved black women, and once that science is established, women of color continue to be the testing ground or the subject of abuse by that very science. The framework of medical technology has enabled the overarching issue of power being wielded over those considered poor or somehow “lesser” humans, often women of color. Intersectional analysis of medical technology alone reveals centuries of bias and discrimination causing very real and unacceptable damage to communities. The example of ICE sterilizations clearly demonstrates xenophobia being used to justify medical atrocities.

The result of technology providing damaging, long-lasting frameworks is that it is more important than ever to recognize those biases and flaws when attempting to make technological progress. Medical issues are a major example because they affect a huge number of people, and the complexity of the healthcare system means that the racism and sexism within have evolved much more than they have been eradicated. With this in mind, consider digital technologies. Services like Google and Amazon rely on massive customer bases and essentially a monopoly to provide their most powerful services. When technologies as wide-reaching as these have racial and gender biases built in, they will not only provide a biased system, but in many cases these digital tools actually propagate bias. The video “Gender Shades” by Joy Buolamwini demonstrates a clear racial and gendered bias in facial recognition algorithms. And as Dr. Chris Gilliard states in his talk, “if you are not at least attempting to design bias out, then by nature you’re designing it in”. In many cases, there is a great need for an intersectional analysis, because advancements in digital technology are continually applied by major institutions with seemingly little concern for external consequences.

However, this is not meant to be an essay on despair. The criticality of intersectional analysis in digital technologies may also lead to positive change, even if initially the change is dangerous because of the scale of the technology. The internet and other systems allow for these issues to be more widely understood, and more than ever people are aware of them. Perhaps digital technology can actually provide the tools needed to improve on social progress associated with technology in general.

Recognizing People but Not Enough

Our society has made impressive technological advancements but has yet to fix many social justice issues. This often means that technology can be detrimental to certain groups of people. Aspects of one’s identity build up to create a measure of privilege. It is not one identity or another, but a combination of things that defines how the world treats a person. In the past, technology exploited certain groups of people for its advancement. This has improved slightly with time, but the emerging issue today is technological advancement that moves too quickly to include all kinds of people.

The societal treatment of an individual can be better understood when considering the term “intersectionality.” Intersectionality describes how certain identities will overlap and cause individuals more social injustice and discrimination than with only one of those identities. This term was coined by Dr. Kimberlé Crenshaw in her article, “Demarginalizing the Intersection of Race and Sex,” when describing the struggles of black women who face both racism and sexism. A specific example was of a woman fighting a discriminatory hiring battle. The court would only acknowledge the case as a racism or sexism issue but not both. So, when considering an individual or a group of people, it is important to consider all aspects of the identity and how society might treat them.

Looking at a definition of technology reveals where there are spaces for discrimination. Technology could be defined as the practical application of scientific knowledge meant to benefit individuals or society as a whole. However, technology does not always benefit everyone equally, and it sometimes comes at other people’s expense. Scientific knowledge is an accumulation of ideas and influence from countless people doing extensive experimentation. Acknowledging the past behind scientific breakthroughs and the resulting innovations gives technologies a significant weight. Dr. Cooper Owens has written a book called Medical Bondage that discusses the history behind modern gynecology and pioneering doctors like Dr. J. Marion Sims. Gynecology is a very important science to have; however, much of this knowledge came from dangerous experimentation often performed on the most vulnerable and degraded people of the time: enslaved women. This demographic was dehumanized by much of the culture in the Western world. The doctors and scientists of this time had beliefs of inequality that became apparent in their work. A similar, modern example would be the flawed Dalkon Shield IUD that led to miscarriages and emergency hysterectomies. This birth control was actively pushed on lower income women, and when it was made illegal in the US it was sent overseas to underdeveloped countries (Stanley). This type of abusive research is less frequent in the present, but it is not completely eradicated.

Certain technologies of today tend to favor some demographics over others, which acts as technological discrimination. Stories of sensors not registering darker skin (Jackson), facial recognition being unable to identify black women (Feloni), or AI enforcing stereotypes through predictive search algorithms (Gilliard) all call into question how this discrimination is getting into technology. Technology is meant to be objective, but it only works with given information. As discussed earlier, the teams behind the science and innovation that are creating technology can have biases and ingrained beliefs that are harmful to potential users. If the people working on a technology all have similar backgrounds, there may be shared biases that are never acknowledged as wrong. Joy Buolamwini, an MIT researcher, conducted a study about facial recognition flaws and shared it. This caused IBM to address issues within their programs and improve them significantly in a relatively short amount of time (Feloni). The issue was easily fixed but not initially seen as significant enough to address. This shows how easy it is to exclude people if no one contributing to the technology is going to acknowledge diversity.

Technology is becoming an increasingly large part of today’s society. However, if the technology is only progressing for certain people, the rest of the population is getting left behind and ignored. When people are set apart and below others, they will be dehumanized and abused like Sims’ patients and the women given dangerous birth control. In the past, people were allowed to dehumanize certain groups of people. Today, society has progressed enough to recognize the flaws of the past, but not enough to recognize that discrimination is still apparent. Advancing biased technology faster creates a society that becomes increasingly difficult for disadvantaged people. Diversity and inclusivity must be present to mitigate the biases that are negatively affecting the technology field and society itself.

Works Cited

Crenshaw, Kimberlé. “Demarginalizing the Intersection of Race and Sex: A Black Feminist Critique of Antidiscrimination Doctrine, Feminist Theory and Antiracist Politics.” University of Chicago Legal Forum, vol. 1989, no. 1, 1989, article 8.

Gilliard, Chris. “From Redlining to Digital Redlining.” Academic Technology Expo. Academic Technology Expo, 2018, Norman, University of Oklahoma.

Jackson, Janine, and Ruha Benjamin. “Black Communities Are Already Living in a Tech Dystopia.” Fair.org, CounterSpin, 15 Aug. 2019, fair.org/home/black-communities-are-already-living-in-a-tech-dystopia/. Accessed 10 Oct. 2020.

Feloni, Richard. “An MIT Researcher Who Analyzed Facial Recognition Software Found Eliminating Bias in AI Is a Matter of Priorities.” Business Insider, Business Insider, 23 Jan. 2019, http://www.businessinsider.com/biases-ethics-facial-recognition-ai-mit-joy-buolamwini-2019-1.

Owens, Deirdre Cooper. Medical Bondage: Race, Gender, and the Origins of American Gynecology. The University of Georgia Press, 2018.

Stanley, Jenn. “Loretta Ross on the Dalkon Shield Disaster.” CHOICE/LESS, performance by Loretta Ross, season 1, episode 1, Rewire Radio, 7 June 2017, rewirenewsgroup.com/multimedia/podcast/choiceless-backstory-episode-2-loretta-ross-dalkon-shield-disaster/.

Gender and technology have changed in many ways and forms. Whether the change is medical, scientific, or technical, a great deal of progress has been made, all while still showing similar themes of power structures and oppression.

To start, Medical Bondage by Deirdre Cooper Owens describes how the first women’s hospital in the United States was on a small slave farm in Alabama around 1844. Cooper Owens explains how a white doctor, Sims, used enslaved women for experimental training. In the field of gynecology, black women were used for training in surgical procedures. When a procedure succeeded, doctors would perform the same surgery on white women. These doctors believed that black women had a higher tolerance for pain than white women and that their bodies were somehow different, yet they still practiced on black women’s bodies and then applied what they learned to white women’s bodies (which actually proved that the bodies are exactly the same).

Similarly, moving forward about a hundred years, the Tuskegee Syphilis Study, begun in 1932, was conducted specifically on black men. These men were left untreated for syphilis in a study that lasted 40 years. Once again, medical studies advanced by using people whom researchers basically considered “disposable.” We start seeing a theme in medical technology: a field that advances while using women and people of color as experimental subjects.

In present times, some of the same ideologies carry on. In another article we read, “I Was Pregnant and in Crisis. All the Doctors and Nurses Saw Was an Incompetent Black Woman” by Tressie McMillan Cottom, the author shares her experience as a black woman being treated by doctors. She was not taken seriously and ended up losing her daughter. To this day, there are significant disparities in care between pregnant black women and pregnant white women. Even with all of the technological advances in health care, patients of different genders and skin colors are not treated the same.

The intersection of gender and race also plays a role in technological advances such as artificial intelligence, e-commerce, and advertising. Joy Buolamwini, an MIT student, conducted extensive research, called Gender Shades, on how artificial intelligence classifies gender across demographics. She wanted to see how different gender classification systems worked across different faces and skin types. After evaluating systems from IBM, Microsoft, and Face++, she concluded that all three performed better on males than on females and on lighter subjects than on darker subjects. One reason this happens is the lack of diversity in the images used as training sets. The amount of error in these systems is still unacceptable and preventable.

For e-commerce and advertising, Professor Chris Gilliard’s lecture on digital redlining discusses how Amazon provides same-day shipping to a large area on a map except for the middle, which happens to be a black neighborhood. Amazon, being a giant e-commerce site that uses AI and advanced code, should not be making these types of mistakes. Whether or not this was intentional, it is another technological advance that contributes to oppression along intersecting lines of race and gender.

Finally, another example of technology being used to reinforce an existing power system along lines of gender and race is online advertising. Whether on Google, Facebook, or any other platform, all sorts of advertisements, including job advertisements, are displayed to users based on their gender along with other information such as race and geographic location. In her study “Discrimination in Online Ad Delivery,” Professor Latanya Sweeney exposes discrimination in ads. In one test, she searched for black and white women’s names and found that black women’s names triggered ads for background checks and police reports, while white women’s names did not. The same issue also occurs in the way job ads are shared on Facebook, where certain jobs are displayed only to specific genders and demographics.

In conclusion, when you look at gender and technology throughout history and the present, there have been significant, life-changing advances. At the same time, there has been very little advance in how gender (and intersectionality) comes into play with these evolving technologies. Technology is always advancing, while the inclusiveness of gender is advancing at a much slower rate. About six or seven weeks ago, I would not have been able to put gender and technology together or understand how significant gender is in technology, from men discrediting women’s technological advances and not giving them a space to be heard to people being experimented on for both medical and technical (online/social media) purposes.